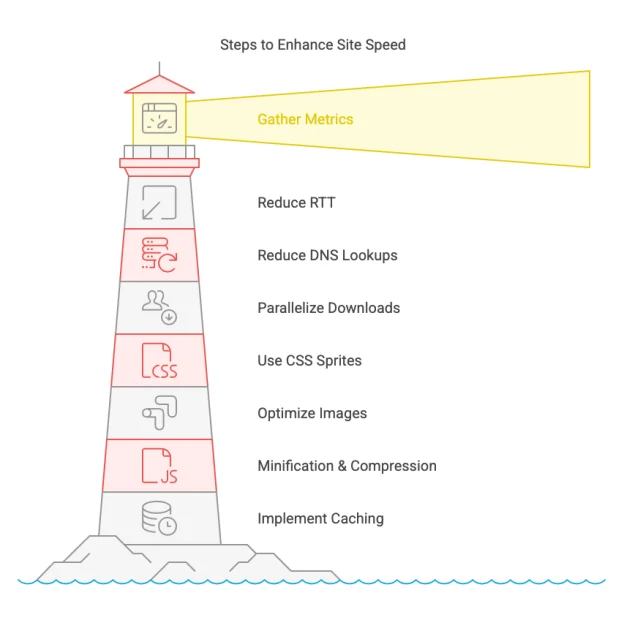

Google have introduced a new tag management service that lets website owners streamline the management of analytics, advertising, and conversion tags on their sites.

Anyone working in online marketing knows the challenges of managing the code snippets needed for site performance tracking. These tags often require frequent tweaks, and coordination between marketers and webmasters is not always smooth.